Anticipation / Expectation:

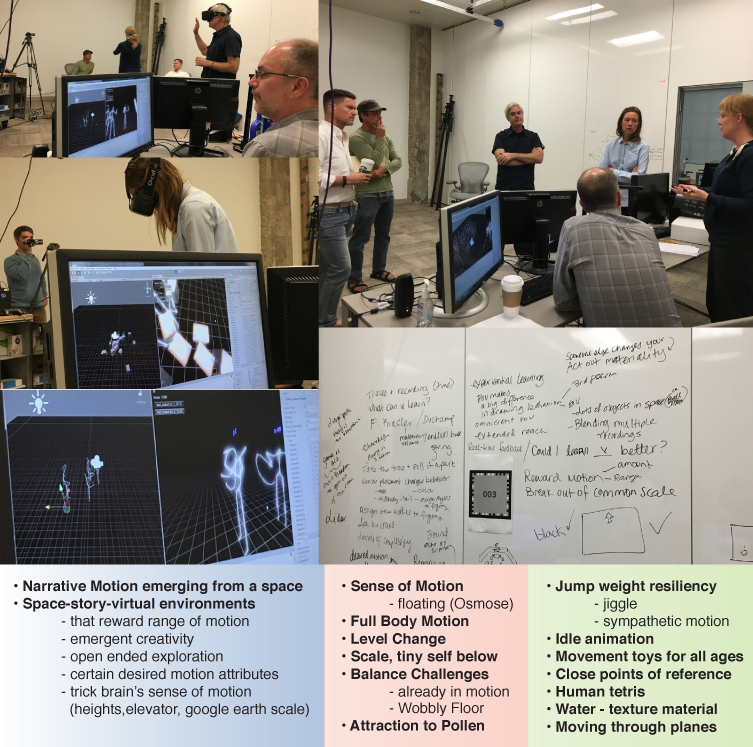

• To promote discussion and questions about full-body engagement and motion in VR, capturing action with playback and real-time drawing, and representation in VR spaces...

• To pose the question “what is this for?”

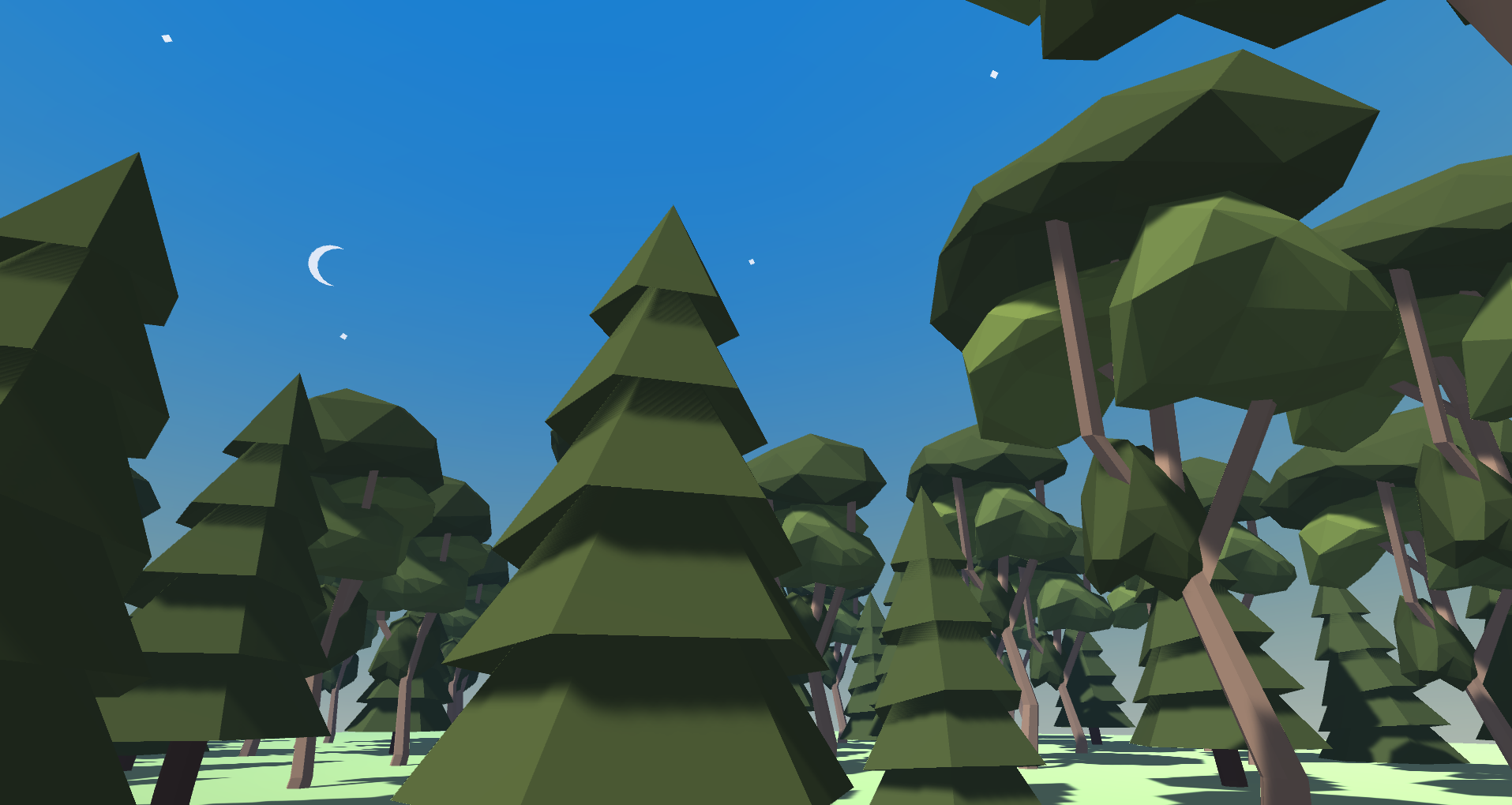

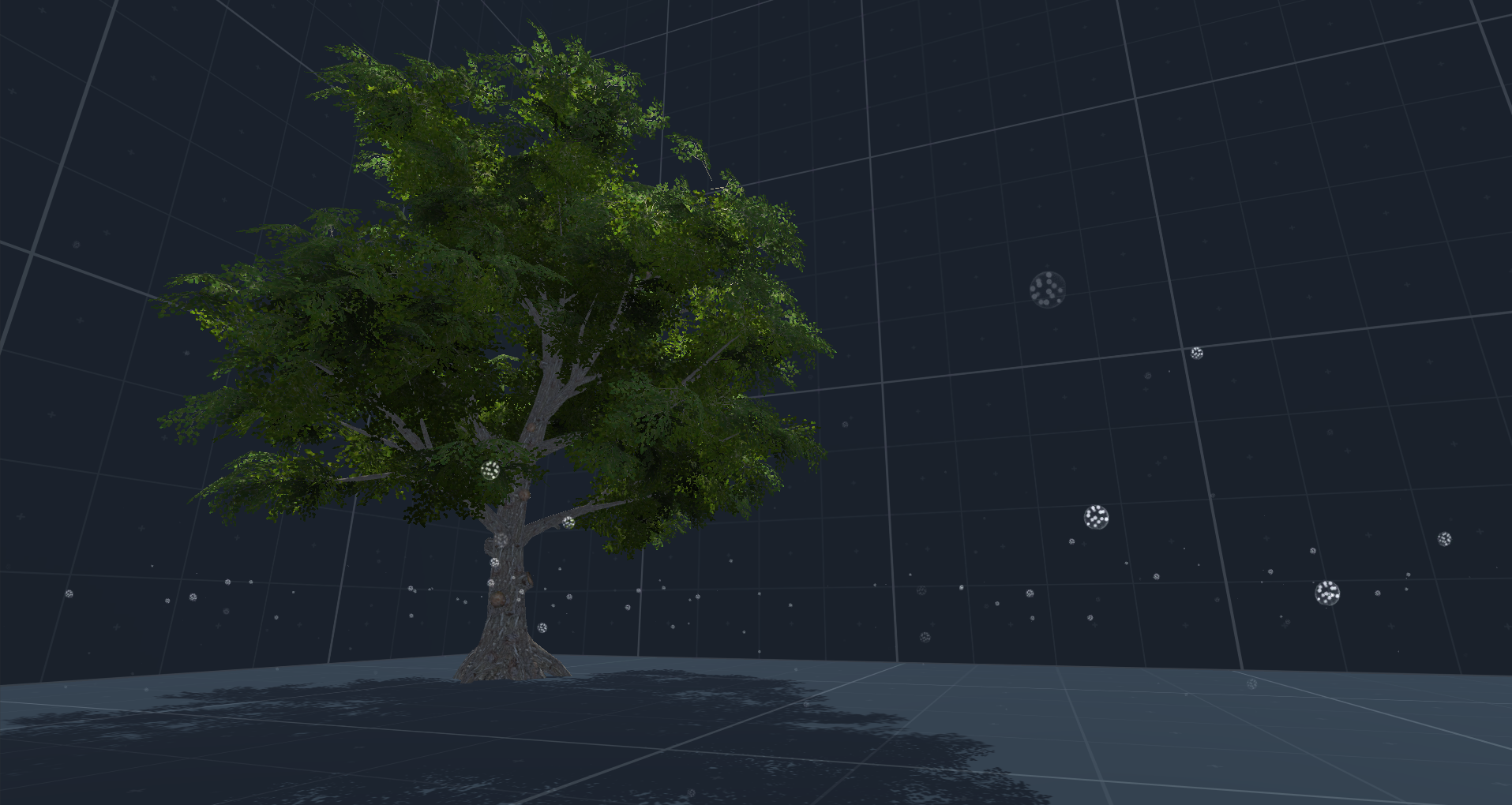

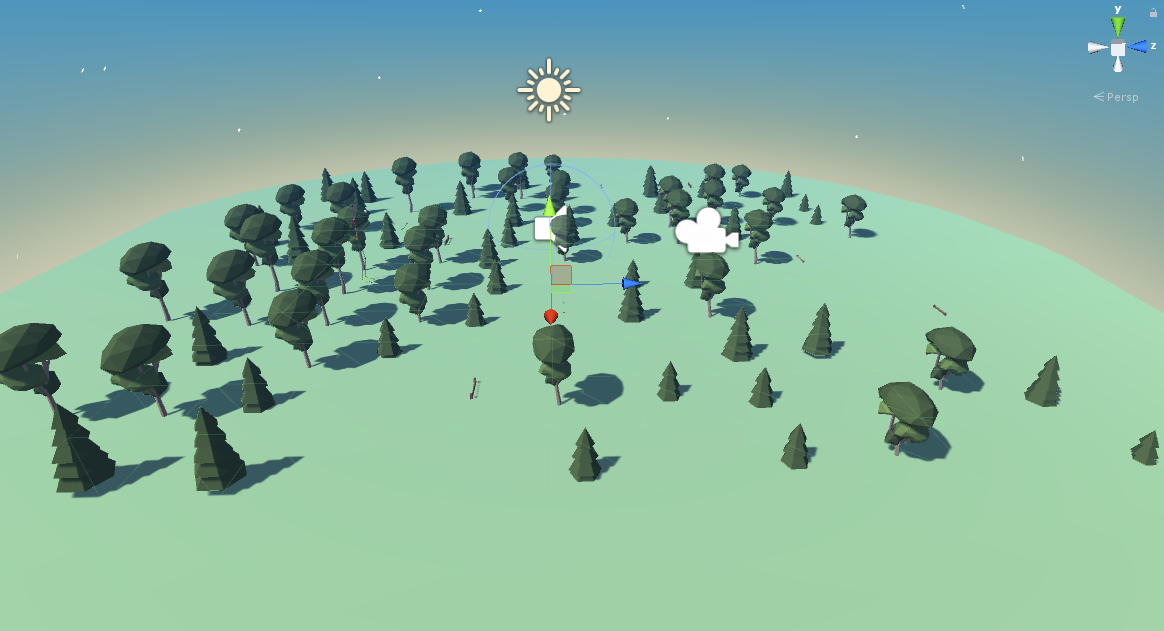

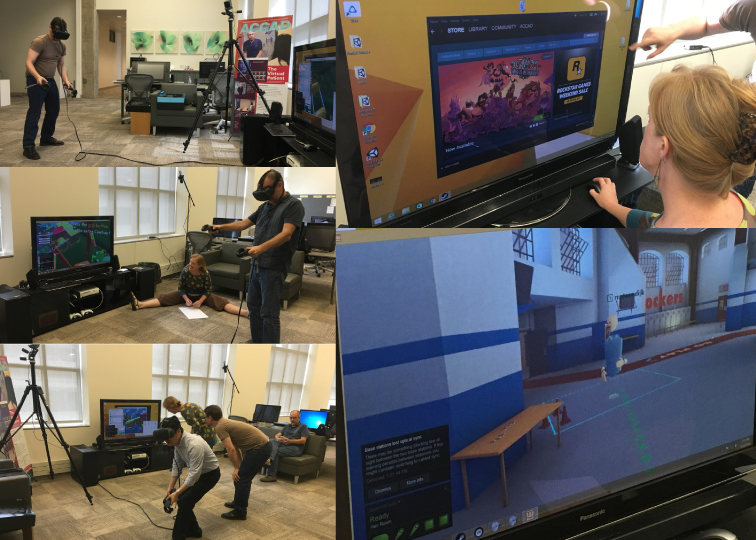

• To explore the VR format (presumably reflecting a current interest in the use of HMDs with head tracking).

• To explore embodiment in virtual space: multi-sensory engagement compared with full-body engagement and representation (point of view / gaze).

• To explore the recording of motion (playback, reflection, and analysis of how participants move and engage over time).

• To explore internal development (beginning to develop tools for portable templates and future sandboxes created in-house).

• To focus on the user reflecting on his/her own body as the active element in the space, free of encumbrances such as hand-held wands or game controllers.

Disposition / Experience:

• How can people physically engage in a virtual space in interesting, new, creative and/or healthful ways?

• What makes the VR player do things that are fun to watch as well as fun for them?

• How could desired motions drive game mechanics, for example by encouraging people to extend their range of motion, change levels, make cross-lateral patterns, and balance?

• Could additional bodies in the space of the VR experience (either inside or outside it) create a more interesting learning environment for a viewer / user / player?

• Could you create a dance score with moving objects in the virtual realm? If so, what would these objects be?

• Who is our intended / ideal audience? ... How do we want our experience to relate to, and possibly change, who they are or how they think?

• How can we enhance the experience so that we can make evaluative design decisions from within the virtual space?

• How can we teach game design through new technologies that are not yet fully realized? (SS)

• How can we navigate the world better than with handheld devices?

• Could it be that games are real, and toys are not? ... The context is fiction, but the decisions are real, and lasting.

Reflection / Opportunity:

• VR player as performer...

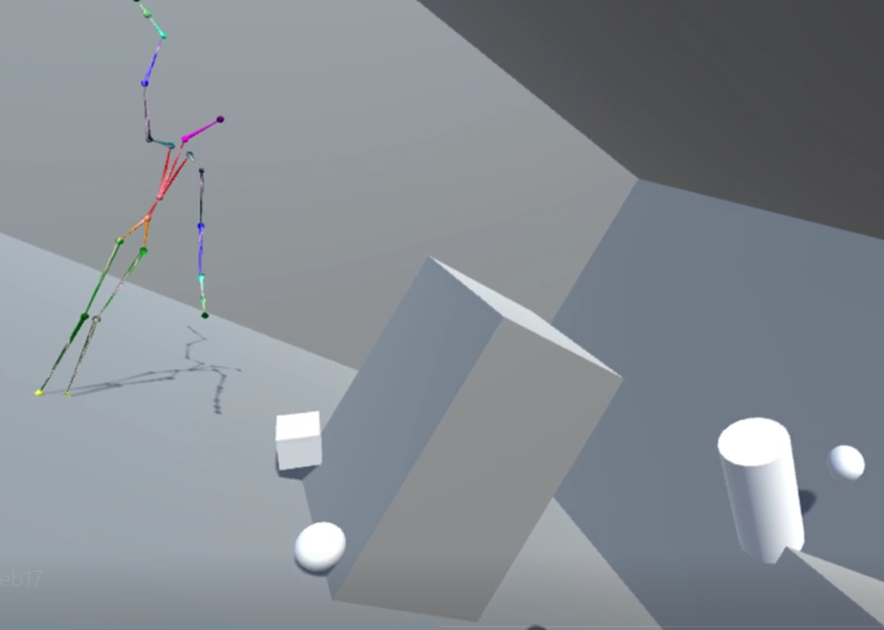

• We felt that interacting with our own recorded motion and the traced forms made us more aware of our bodies (for better or worse).

• Modeling of any kind is obviously a richer experience in 3D, when I can build in layers and then look through them dimensionally.

• Recording motion was a hit. ... I want to go back in now and try to choreograph those figures.

• We were toying with the idea of a human Tetris-style game that would not require a lot of space to play, with an environment that could scale to your available real-world play space.

• It was very interesting for me when I began to think about physical motions as ‘player mechanics’ in a game-related environment.

• The third-person perspective and the omniscient high viewpoint were of interest.

• I really, really wanted my avatar to be an ‘it’.

• We are interested in play spaces that are physically, socially and creatively engaged.

• I’d like a humanist to help think about narrative and ethical contexts of some of this work and the relationship to post-humanism.

• VR work in conversation with Ghostcatching, a kind of partial reconstruction, would be fun.

• I’d like to make a 3D drawing experience that takes IMPROV TECHNOLOGIES into VR.

• I’d like to make something that invites cross-lateral motion.

• The big thing I am thinking about is the place of movement qualities in a VR environment, and how training a user to engage movement qualities could lead to more empathetic interactions with the world through a renewed understanding of one’s own movement proclivities, which inevitably connect to emotions (how do humane technologies work toward that end?). I am thinking specifically in terms of the vocabulary associated with the Laban systems for movement qualities.

• I’m considering the balance of how each medium [movement improvisations and VR-generated environments] retains its integrity while enhancing the best traits of the other... perhaps this ties into the discussion of empathy and self/group awareness.

• I am thinking about the following: the relationship between avatar and player; player-driven goals; connections between environments; visual themes; activities; and the external world.