Collaborating faculty Matthew Lewis writes: In a previous blog post I described my introduction to the Humane Technologies project and my intentions for the pop-up week: exploring the use of interactive virtual reality to simulate an Internet of Things (IoT) filled space, with participants embodying the roles of the communicating smart objects inhabiting the environment. Leading up to the big week, I met with several of the participating faculty who gave me invaluable suggestions for additional readings, relevant pop culture references, and other perspectives on possible "motivations" for the IoT devices to be simulated in the project.

During the pop-up week Professor Michelle Wibbelsman and I met with Professor Hannah Kosstrin's dance class and explained the basic idea of the project. Michelle and I had come up with a few exercises/scores with different emphases for the students to try out. For example, we initially split the students into two groups and asked one group to take a dystopian perspective on IoT devices, while the other group imagined a more utopian viewpoint. While the devices in the latter group focused on keeping the apartment inhabitant happy and comfortable, the former group embodied more of an overbearing nanny/salesperson space. For the initial round, we had requested that the performers communicate primarily via motion. There was a strong tendency, however, to want to speak primarily to the person in VR and to communicate in general via anthropocentric means. For the next round we requested that communication be only through movement, and primarily between the IoT devices, rather than focusing on communicating with the apartment's inhabitant. Additionally, we asked some performers to take on the roles of aspects of the communications infrastructure: one dancer was "Wi-Fi" and others were "messages" traveling through the network between the devices.

There was very little time for planning between each performance/simulation, so most of the resulting systems and processes were improvised during each performance. As a result, very little successful motion-based communication actually took place (though lots of attempts were made). However, these sorts of initial experiments using no technology in the classroom gave us a great deal of information and discussion points for our technology-based experiences a couple of days later.

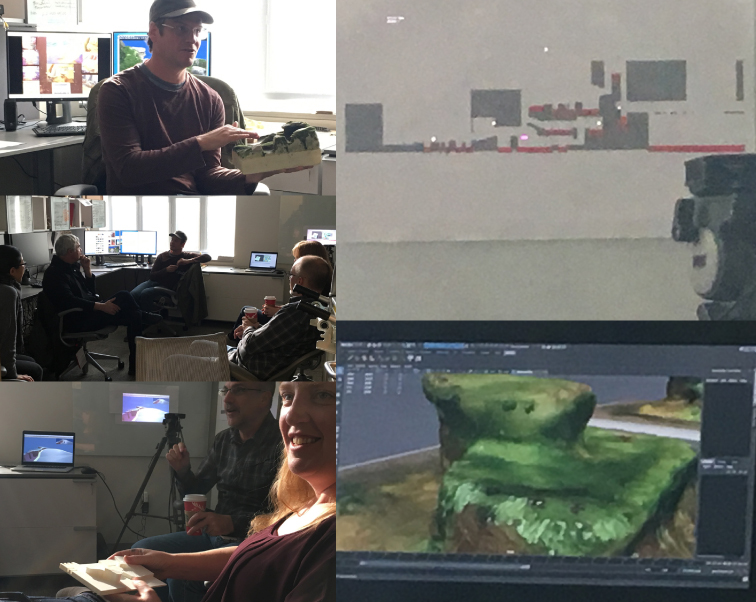

Several people were involved in implementing the quickly assembled technological system. I had initially specified the desired system features and set up the physical system components. Skylar Wurster (Computer Science undergrad) and Dr. J Eisenmann (ACCAD alumnus / Adobe research) implemented the interaction and control scripts in the Unity real-time 3D environment. Kien Hoang (ACCAD Design grad) assembled a 3D virtual apartment for the VR environment.

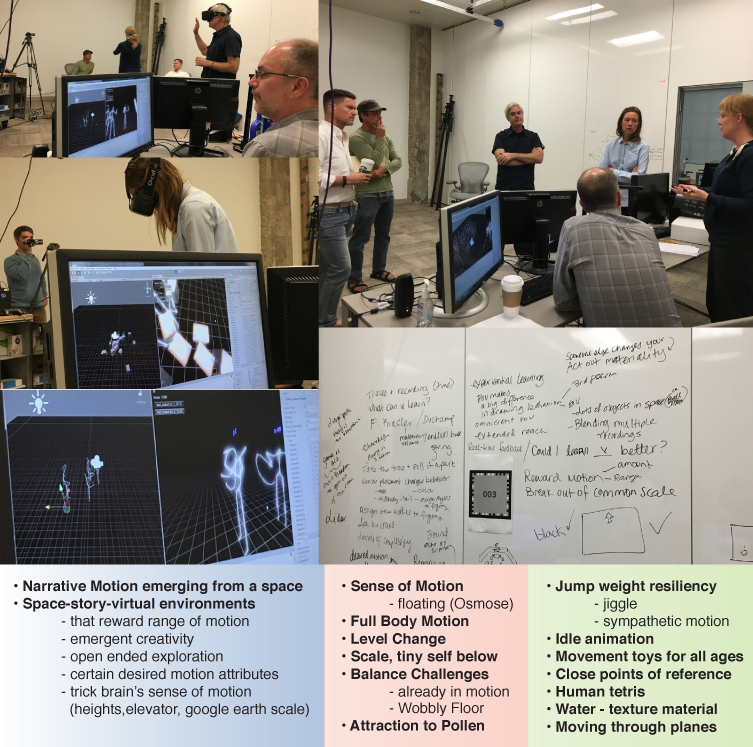

Professor Kosstrin participated in the role of the inhabitant of the VR apartment. At Professor Wibbelsman's suggestion we avoided naming this character so as not to bias our notions of their role too strongly (e.g. "owner", "user", "person", "human", or "human object"). We ended up frequently making a stick figure gesture mid-sentence to refer to them during our discussions. The intent was that, as the physical performers communicated outside of VR, there would be some indication inside VR that the virtual smart objects were talking to one another. A few visual options were implemented in the system: the objects could move (e.g. briefly "hopping" a small amount), they could glow, or they could transmit spheres between one another, like throwing a ball. Given the motion-based communications we were attempting with the dancers, I chose to use primarily the movement method to show the VR appliances communicating. This was implemented with a slight delay: if the smart chair was going to send a message to the smart TV, first the chair would move, then the TV would move, as if in response. I imagined this being perceived like someone waving or signaling, followed by the message recipient responding by waving back.
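
The send-then-respond timing described above can be sketched in a few lines. This is not the project's code (the actual scripts were written in Unity); it is a minimal Python illustration, and the names `AnimationEvent`, `schedule_message`, and the half-second `RESPONSE_DELAY` are assumptions made up for this example.

```python
from dataclasses import dataclass

# Assumed value for illustration; the actual delay used in the project is not documented here.
RESPONSE_DELAY = 0.5  # seconds between the sender's move and the recipient's move


@dataclass(frozen=True)
class AnimationEvent:
    time: float   # seconds after the message is triggered
    device: str   # name of the virtual smart object (hypothetical labels)
    action: str   # which visual cue to play, e.g. "hop" or "glow"


def schedule_message(sender, recipient, start=0.0, delay=RESPONSE_DELAY, action="hop"):
    """Return the two animation events for one message: sender first, recipient after a delay."""
    return [
        AnimationEvent(start, sender, action),
        AnimationEvent(start + delay, recipient, action),
    ]


# Example: the smart chair "waves" at the smart TV, and the TV "waves back".
events = schedule_message("chair", "tv")
for event in events:
    print(f"t={event.time:.2f}s  {event.device}  {event.action}")
```

Keeping the cue (`action`) as a parameter means the same schedule could drive the glow option instead of the hop without changing the timing logic.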

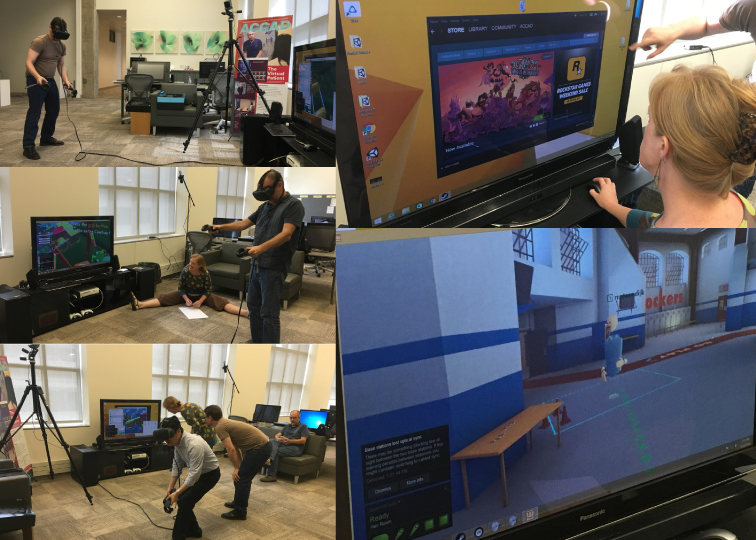

We investigated two methods for connecting communications in the physical and virtual worlds. In our first trials, we simply relied on an indirect puppetry approach. A student at a workstation (Skylar) watched the dancers, and when one started communicating with another, he would press the corresponding key to trigger the communication animation in the virtual world. For one of the later runs, Ben Schroeder (ACCAD alumnus / Google research), Jonathan Welch (ACCAD Design grad), and Isla Hansen (ACCAD Art faculty) all contributed solutions that let the dancers touch a wire to trigger a communication. While this had the advantage of giving the performers direct control of their virtual counterparts, the downside was that it limited their movement possibilities. Regardless, inside VR, the movement of the appliances did not read as communication for our VR participant: "Why is the refrigerator hopping?" The brief session didn't leave time to experiment with the other communication animation approaches, but I suspect some of the other modes might have fared better.

Professor Wibbelsman led the group in discussion, and we quickly discovered that our goal of eliciting new ideas about future possibilities for these emerging technologies seemed to be a success: everyone had many strong opinions about what might emerge and big questions about what they might be more or less comfortable with. One further practical consideration that emerged was the need for dancers to use a separate "narration" voice to communicate with the person in VR, to tell them things they needed to pretend were happening in VR as the improvisation ran its course (e.g. a refrigerator door opening and giving them access to ice cream). Despite the pop-up providing an invaluable week of time for everyone to focus on prototyping projects such as these, one of the more surprising challenges was having access to people's time. Many of the details of the project were not the result of well-considered design decisions but rather of what the person who popped up to work for an hour or two could accomplish before jumping back out to a different project.